Math Is Fun Forum

#2126 2024-04-21 00:06:32

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 48,428

Re: Miscellany

2128) Talk show

Gist

A talk show is a chat show, especially one in which listeners, viewers, or the studio audience are invited to participate in the discussion.

Summary

Talk show, radio or television program in which a well-known personality interviews celebrities and other guests. The late-night television programs hosted by Johnny Carson, Jay Leno, David Letterman, and Conan O’Brien, for example, emphasized entertainment, incorporating interludes of music or comedy. Other talk shows focused on politics (see David Susskind), controversial social issues or sensationalistic topics (Phil Donahue), and emotional therapy (Oprah Winfrey).

Details

A talk show (sometimes chat show in British English) is a television programming, radio programming or podcast genre structured around the act of spontaneous conversation. A talk show is distinguished from other television programs by certain common attributes. In a talk show, one person (or group of people or guests) discusses various topics put forth by a talk show host. This discussion can take the form of an interview or a simple conversation about important social, political or religious issues and events. The personality of the host shapes the tone and style of the show. A common feature or unwritten rule of talk shows is that they are based on "fresh talk", which is talk that is spontaneous or has the appearance of spontaneity.

The history of the talk show spans from the 1950s to the present.

Talk shows can also have several different subgenres, which all have unique material and can air at different times of the day via different avenues.

Attributes

Beyond the inclusion of a host, one or more guests, and a studio or call-in audience, specific attributes of talk shows may be identified:

* Talk shows focus on the viewers, including participants calling in, sitting in the studio, or watching online or on TV.

* Talk shows center around the interaction of guests with opposing opinions and/or differing levels of expertise, which include both experts and non-experts.

* Although talk shows include guests of various expertise levels, they often give more weight to the credibility of personal life experience than to formal educational expertise.

* Talk shows involve a host responsible for furthering the agenda of the show by mediating, instigating and directing the conversation to ensure the purpose is fulfilled. The purpose of talk shows is to either address or bring awareness to conflicts, to provide information, or to entertain.

* Talk shows consist of evolving episodes that focus on differing perspectives with respect to important issues in society, politics, religion or other popular areas.

* Talk shows are produced at low cost and are typically not aired during prime time.

* Talk shows are either aired live or recorded live with limited post-production editing.

Subgenres

There are several major formats of talk shows. Generally, each subgenre predominates during a specific programming block during the broadcast day.

* Breakfast chat or early morning shows that generally alternate between news summaries, political coverage, feature stories, celebrity interviews, and musical performances.

* Late morning chat shows that feature two or more hosts or a celebrity panel and focus on entertainment and lifestyle features.

* Daytime tabloid talk shows that generally feature a host, a guest or a panel of guests, and a live audience that interacts extensively with the host and guests. These shows may feature celebrities, political commentators, or "ordinary" people who present unusual or controversial topics.

* "Lifestyle" or self-help programs that generally feature a host or hosts of medical practitioners, therapists, or counselors and guests who seek intervention, describe medical or psychological problems, or offer advice. An example of this type of subgenre is The Oprah Winfrey Show, although it can easily fit into other categories as well.

* Evening panel discussion shows that focus on news, politics, or popular culture (such as the former UK series After Dark which was broadcast "late-night").

* Late-night talk shows that focus primarily on topical comedy and variety entertainment. Most traditionally open with a monologue by the host, with jokes relating to current events. Other segments typically include interviews with celebrity guests, recurring comedy sketches, as well as performances by musicians or other stand-up comics.

* Sunday morning talk shows are a staple of network programming in North America, and generally focus on political news and interviews with elected political figures and candidates for office, commentators, and journalists.

* Aftershows that feature in-depth discussion about a program on the same network that aired just before (for example, Talking Dead).

* Spoof talk shows, such as Space Ghost Coast to Coast, Tim and Eric Nite Live, Comedy Bang! Bang!, and The Eric Andre Show, that feature mostly scripted interviews presented in a humorous and satirical way, or that subvert the norms of the format (particularly those of late-night talk shows).

These formats are not absolute; some afternoon programs have similar structures to late-night talk shows. These formats may vary across different countries or markets. Late night talk shows are especially significant in the United States. Breakfast television is a staple of British television. The daytime talk format has become popular in Latin America as well as the United States.

These genres also do not represent "generic" talk show genres. "Generic" genres are categorized based on the audience's social views of talk shows, derived from their cultural identities, fondness, preferences and character judgements of the talk shows in question. The subgenres listed above are based on television programming and broadly defined according to the TV guide rather than on the more specific categorizations of talk show viewers. However, there is a lack of research on "generic" genres, making it difficult to list them here. According to Mittell, "generic" genres are of significant importance in further identifying talk show genres: given the differences in cultural preferences within the subgenres, a further distinction of genres would better represent and target the audience.

Talk-radio host Howard Stern also hosted a talk show that was syndicated nationally in the US, then moved to satellite radio's Sirius. The tabloid talk show genre, pioneered by Phil Donahue in 1967 but popularized by Oprah Winfrey, was extremely popular during the last two decades of the 20th century.

Politics are hardly the only subject of American talk shows, however. Other radio talk shows include NPR's Car Talk and Coast to Coast AM, hosted by Art Bell and George Noory, which discusses the paranormal, conspiracy theories, and fringe science. Sports talk shows are also very popular, ranging from high-budget shows like The Best Damn Sports Show Period to Max Kellerman's original public-access cable show Max on Boxing.

History

Talk shows have been broadcast on television since the earliest days of the medium. Joe Franklin, an American radio and television personality, hosted the first television talk show. The show began in 1951 on WJZ-TV (later WABC-TV) and moved to WOR-TV (later WWOR-TV) from 1962 to 1993.

NBC's The Tonight Show is the world's longest-running talk show; having debuted in 1954, it continues to this day. The show underwent some minor title changes until settling on its current title in 1962, and despite a brief foray into a more news-style program in 1957 and then reverting that same year, it has remained a talk show. Ireland's The Late Late Show is the second-longest running talk show in television history, and the longest running talk show in Europe, having debuted in 1962.

Steve Allen was the first host of The Tonight Show, which began as a local New York show, being picked up by the NBC network in 1954. It in turn had evolved from his late-night radio talk show in Los Angeles. Allen pioneered the format of late night network TV talk shows, originating such talk show staples as an opening monologue, celebrity interviews, audience participation, and comedy bits in which cameras were taken outside the studio, as well as music, although the series' popularity was cemented by second host Jack Paar, who took over after Allen had left and the show had ceased to exist.

TV news pioneer Edward R. Murrow hosted a talk show entitled Small World in the late 1950s and since then, political TV talk shows have predominantly aired on Sunday mornings.

Syndicated daily talk shows began to gain more popularity during the mid-1970s and reached their height of popularity with the rise of the tabloid talk show. Morning talk shows gradually replaced earlier forms of programming — there were a plethora of morning game shows during the 1960s and early to mid-1970s, and some stations formerly showed a morning movie in the time slot that many talk shows now occupy.

Late-night talk shows such as The Tonight Show Starring Jimmy Fallon, Conan and The Late Show with Stephen Colbert have featured celebrity guests and comedy sketches. Syndicated daily talk shows range from tabloid talk shows, such as Jerry Springer and Maury, to celebrity interview shows, like Live with Kelly and Ryan, Tamron Hall, Sherri, Steve Wilkos, The Jennifer Hudson Show and The Kelly Clarkson Show, to industry leader The Oprah Winfrey Show, which popularized the former genre and evolved towards the latter. On November 10, 2010, Oprah Winfrey invited several of the most prominent American talk show hosts (Phil Donahue, Sally Jessy Raphael, Geraldo Rivera, Ricki Lake, and Montel Williams) to join her as guests on her show. The 1990s in particular saw a spike in the number of "tabloid" talk shows, most of which were short-lived and have since been replaced by the more universally appealing "interview" or "lifestyle TV" format.

Talk shows have more recently started to appear on Internet radio. Also, several Internet blogs are in talk show format including the Baugh Experience.

The current world record for the longest talk show is held by Rabi Lamichhane from Nepal, who stayed on air for 62 hours from April 11 to 13, 2013, breaking the previous record of 52 hours set by two Ukrainians in 2011.

In 2020, the fear of the spread of the coronavirus led to large changes in the operation of talk shows, with many being filmed without live audiences to ensure adherence to the rules of social distancing. The inclusion of a live, participating audience is one of the attributes that contribute to the defining characteristics of talk shows. Operating without the interaction of viewers created difficult moments and awkward silences for hosts who usually relied on audience responses to transition between conversations.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#2127 2024-04-22 00:06:25

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 48,428

Re: Miscellany

2129) Technocracy

Gist

The related term technocrat means:

a) a proponent, adherent, or supporter of technocracy.

b) a technological expert, especially one concerned with management or administration.

Summary

Technocracy, government by technicians who are guided solely by the imperatives of their technology. The concept developed in the United States early in the 20th century as an expression of the Progressive movement and became a subject of considerable public interest in the 1930s during the Great Depression. The origins of the technocracy movement may be traced to Frederick W. Taylor's introduction of the concept of scientific management. Writers such as Henry L. Gantt, Thorstein Veblen, and Howard Scott suggested that businessmen were incapable of reforming their industries in the public interest and that control of industry should thus be given to engineers.

The much-publicized Committee on Technocracy, headed by Walter Rautenstrauch and dominated by Scott, was organized in 1932 in New York City. Scott proclaimed the invalidation, by technologically produced abundance, of all prior economic concepts based on scarcity; he predicted the imminent collapse of the price system and its replacement by a bountiful technocracy. Scott’s academic qualifications, however, were discredited in the press, some of the group’s data were questioned, and there were disagreements among members regarding social policy. The committee broke up within a year and was succeeded by the Continental Committee on Technocracy, which faded by 1936, and Technocracy, Inc., headed by Scott. Technocratic organizations sprang up across the United States and western Canada, but the technocracy movement was weakened by its failure to develop politically viable programs for change, and support was lost to the New Deal and third-party movements. There were also fears of authoritarian social engineering. Scott’s organization declined after 1940 but still survived in the late 20th century.

Details

What Is Technocracy?

A technocracy is a model of governance wherein decision-makers are chosen for office based on their technical expertise and background. A technocracy differs from a traditional democracy in that individuals selected for a leadership role are chosen through a process that emphasizes their relevant skills and proven performance, as opposed to whether or not they fit the majority interests of a popular vote.

The individuals that occupy such positions in a technocracy are known as "technocrats." An example of a technocrat could be a central banker who is a trained economist and follows a set of rules that apply to empirical data.

KEY TAKEAWAYS

* A technocracy is a form of governance whereby government officials or policymakers, known as technocrats, are chosen by some higher authority due to their technical skills or expertise in a specific domain.

* Decisions made by technocrats are supposed to be based on information derived from data and objective methodology, rather than opinion or self-interest.

* Critics complain that technocracy is undemocratic and disregards the will of the people.

How Technocracy Works

A technocracy is a political entity ruled by experts (technocrats) who are selected or appointed by some higher authority. Technocrats are, supposedly, selected specifically for their expertise in the area over which they are delegated authority to govern. In practice, because technocrats must always be appointed by some higher authority, the political structure and incentives that influence that higher authority will always also play some role in the selection of technocrats.

An official who is labeled as a technocrat may not possess the political savvy or charisma that is typically expected of an elected politician. Instead, a technocrat may demonstrate more pragmatic and data-oriented problem-solving skills in the policy arena.

Technocracy became a popular movement in the United States during the Great Depression when it was believed that technical professionals, such as engineers and scientists, would have a better understanding than politicians regarding the economy's inherent complexity.

Although democratically elected officials may hold seats of authority, most come to rely on the technical expertise of select professionals in order to execute their plans.

Defense measures and policies in government are often developed with considerable consultation with military personnel to provide their firsthand insight. Medical treatment decisions, meanwhile, are based heavily on the input and knowledge of physicians, and city infrastructures could not be planned, designed, or constructed without the input of engineers.

Critiques of Technocracy

Reliance on technocracy can be criticized on several grounds. The acts and decisions of technocrats can come into conflict with the will, rights, and interests of the people whom they rule over. This in turn has often led to populist opposition to both specific technocratic policy decisions and to the degree of power in general granted to technocrats. These problems and conflicts help give rise to the populist concept of the "deep state", which consists of a powerful, entrenched, unaccountable, and oligarchic technocracy which governs in its own interests.

In a democratic society, the most obvious criticism is that there is an inherent tension between technocracy and democracy. Technocrats often may not follow the will of the people because by definition they may have specialized expertise that the general population lacks. Technocrats may or may not be accountable to the will of the people for such decisions.

In a government where citizens are guaranteed certain rights, technocrats may seek to encroach upon these rights if they believe that their specialized knowledge suggests that it is appropriate or in the larger public interest. The focus on science and technical principles might also be seen as separate and disassociated from the humanity and nature of society. For instance, a technocrat might make decisions based on calculations of data rather than the impact on the populace, individuals, or groups within the population.

In any government, regardless of who appoints the technocrats or how, there is always a risk that technocrats will engage in policymaking that favors their own interests or others whom they serve over the public interest. Technocrats are necessarily placed in a position of trust, since the knowledge used to enact their decisions is to some degree inaccessible or not understandable to the general public. This creates a situation where there can be a high risk of self-dealing, collusion, corruption, and cronyism. Economic problems such as rent-seeking, rent-extraction, or regulatory capture are common in technocracy.

Additional Information

Technocracy is a form of government in which the decision-makers are selected based on their expertise in a given area of responsibility, particularly with regard to scientific or technical knowledge. Technocracy follows largely in the tradition of other meritocracy theories and assumes full state control over political and economic issues.

This system explicitly contrasts with representative democracy, the notion that elected representatives should be the primary decision-makers in government, though it does not necessarily imply eliminating elected representatives. Decision-makers are selected based on specialized knowledge and performance rather than political affiliations, parliamentary skills, or popularity.

The term technocracy was initially used to signify the application of the scientific method to solving social problems. In its most extreme form, technocracy is an entire government running as a technical or engineering problem and is mostly hypothetical. In more practical use, technocracy is any portion of a bureaucracy run by technologists. A government in which elected officials appoint experts and professionals to administer individual government functions, and recommend legislation, can be considered technocratic. Some uses of the word refer to a form of meritocracy, where the ablest are in charge, ostensibly without the influence of special interest groups. Critics have suggested that a "technocratic divide" challenges more participatory models of democracy, describing these divides as "efficacy gaps that persist between governing bodies employing technocratic principles and members of the general public aiming to contribute to government decision making".

History of the term

The term technocracy is derived from the Greek words τέχνη (tekhne), meaning skill, and κράτος (kratos), meaning power, as in governance or rule. William Henry Smyth, a California engineer, is usually credited with inventing the word technocracy in 1919 to describe "the rule of the people made effective through the agency of their servants, the scientists and engineers", although the word had been used before on several occasions. Smyth used the term Technocracy in his 1919 article "'Technocracy'—Ways and Means to Gain Industrial Democracy" in the journal Industrial Management. Smyth's usage referred to Industrial democracy: a movement to integrate workers into decision-making through existing firms or revolution.

In the 1930s, through the influence of Howard Scott and the technocracy movement he founded, the term technocracy came to mean 'government by technical decision making', using an energy metric of value. Scott proposed that money be replaced by energy certificates denominated in units such as ergs or joules, equivalent in total amount to an appropriate national net energy budget, and then distributed equally among the North American population, according to resource availability.

The derivative term technocrat is also in common usage. The word technocrat can refer to someone exercising governmental authority because of their knowledge, "a member of a powerful technical elite", or "someone who advocates the supremacy of technical experts". McDonnell and Valbruzzi define a prime minister or minister as a technocrat if "at the time of their appointment to government, they: have never held public office under the banner of a political party; are not a formal member of any party; and are said to possess recognized non-party political expertise which is directly relevant to the role occupied in government". In Russia, the President of Russia has often nominated ministers based on technical expertise from outside political circles, and these have been referred to as "technocrats".

Characteristics

Technocrats are individuals with technical training and occupations who perceive many important societal problems as being solvable with the applied use of technology and related applications. The administrative scientist Gunnar K. A. Njalsson theorizes that technocrats are primarily driven by their cognitive "problem-solution mindsets" and only in part by particular occupational group interests. Their activities and the increasing success of their ideas are thought to be a crucial factor behind the modern spread of technology and the largely ideological concept of the "information society". Technocrats may be distinguished from "econocrats" and "bureaucrats" whose problem-solution mindsets differ from those of the technocrats.

Examples

The former government of the Soviet Union has been referred to as a technocracy. Soviet leaders like Leonid Brezhnev often had a technical background. In 1986, 89% of Politburo members were engineers.

Leaders of the Chinese Communist Party used to be mostly professional engineers. According to surveys of municipal governments of cities with a population of 1 million or more in China, over 80% of government personnel had a technical education. Under the five-year plans of the People's Republic of China, projects such as the National Trunk Highway System, the China high-speed rail system, and the Three Gorges Dam have been completed. During China's 20th National Congress, a class of technocrats in finance and economics was replaced in favor of high-tech technocrats.

In 2013, a European Union library briefing on its legislative structure referred to the Commission as a "technocratic authority", holding a "legislative monopoly" over the EU lawmaking process. The briefing suggests that this system, which elevates the European Parliament to a vetoing and amending body, was "originally rooted in the mistrust of the political process in post-war Europe". This system is unusual since the Commission's sole right of legislative initiative is a power usually associated with Parliaments.

Several governments in European parliamentary democracies have been labelled 'technocratic' based on the participation of unelected experts ('technocrats') in prominent positions. Since the 1990s, Italy has had several such governments (in Italian, governo tecnico) in times of economic or political crisis, including the formation in which economist Mario Monti presided over a cabinet of unelected professionals. The term 'technocratic' has been applied to governments where a cabinet of elected professional politicians is led by an unelected prime minister, such as in the cases of the 2011-2012 Greek government led by economist Lucas Papademos and the Czech Republic's 2009–2010 caretaker government presided over by the state's chief statistician, Jan Fischer. In December 2013, in the framework of the national dialogue facilitated by the Tunisian National Dialogue Quartet, political parties in Tunisia agreed to install a technocratic government led by Mehdi Jomaa.

The article "Technocrats: Minds Like Machines" states that Singapore is perhaps the best advertisement for technocracy: the political and expert components of the governing system there seem to have merged completely. This was underlined in a 1993 article in "Wired" by Sandy Sandfort, in which he describes how the island's information technology system, even at that early date, made it effectively intelligent.

Engineering

Following Samuel Haber, Donald Stabile argues that engineers were faced with a conflict between physical efficiency and cost efficiency in the new corporate capitalist enterprises of the late nineteenth-century United States. Because of their perceptions of market demand, the profit-conscious, non-technical managers of firms where the engineers work often impose limits on the projects that engineers desire to undertake.

The prices of all inputs vary with market forces, thereby upsetting the engineer's careful calculations. As a result, the engineer loses control over projects and must continually revise plans. To maintain control over projects, the engineer must attempt to control these outside variables and transform them into constant factors.

#2128 2024-04-22 22:52:21

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 48,428

Re: Miscellany

2130) Hairdresser

Gist

A hairdresser is a person who cuts people's hair and puts it into a style, usually working in a special shop, called a hairdresser's.

Summary

A hairdresser's job is to organise hair into a particular style or "look". They can cut hair, add colour to it or texture it. A hairdresser may be female or male. Qualified staff are usually called "stylists", who are supported by assistants. Most hairdressing businesses are unisex, that is, they serve both sexes, and have both sexes on their staff.

Male hairdressers who simply cut men's hair (and do not serve females) are often called barbers.

Qualifications for hairdressing usually mean a college course, or an apprenticeship under a senior stylist. Some aspects of the job are quite technical (such as hair dyeing) and require careful teaching.

Details

A hairdresser is a person whose occupation is to cut or style hair in order to change or maintain a person's image. This is achieved using a combination of hair coloring, haircutting, and hair texturing techniques. A hairdresser may also be referred to as a 'barber' or 'hairstylist'.

History:

Ancient hairdressing

Hairdressing as an occupation dates back thousands of years. Both Aristophanes and Homer, Greek writers, mention hairdressing in their writings. Many Africans believed that hair is a means of communicating with the Divine Being, since it is the highest part of the body and therefore the closest to the divine. Because of this, hairdressers held a prominent role in African communities. The status of hairdressing encouraged many to develop their skills, and close relationships were built between hairdressers and their clients. Hours would be spent washing, combing, oiling, styling and ornamenting a client's hair. Men would work specifically on men, and women on women. Before a master hairdresser died, they would give their combs and tools to a chosen successor during a special ceremony.

In ancient Egypt, hairdressers had specially decorated cases to hold their tools, including lotions, scissors and styling materials. Barbers also worked as hairdressers, and wealthy men often had personal barbers within their home. Because wig-wearing was standard in the culture, wigmakers were also trained as hairdressers. In ancient Rome and Greece, household slaves and servants took on the role of hairdressers, including dyeing and shaving. Men who did not have their own private hair or shaving services would visit the local barbershop. Women had their hair maintained and groomed at their homes. Historical documentation is lacking regarding hairstylists from the 5th century until the 14th century. Hair care service grew in demand after a papal decree in 1092 demanded that all Roman Catholic clergymen remove their facial hair.

Europe

The first appearance of the word "hairdresser" is in 17th century Europe, when hairdressing was considered a profession. Hair fashion of the period called for wealthy women to wear large, complex and heavily adorned hairstyles, which would be maintained by their personal maids and other people, who would spend hours dressing the woman's hair. A wealthy man's hair would often be maintained by a valet. It was in France where men began styling women's hair for the first time, and many of the notable hairdressers of the time were men, a trend that would continue into contemporary times. The first famous male hairdresser was Champagne, who was born in Southern France. Upon moving to Paris, he opened his own hair salon and dressed the hair of wealthy Parisian women until his death in 1658.

Women's hair grew taller in style during the 17th century, popularized by the hairdresser Madame Martin. The hairstyle, "the tower," was the trend with wealthy English and American women, who relied on hairdressers to style their hair as tall as possible. Tall piles of curls were pomaded, powdered and decorated with ribbons, flowers, lace, feathers and jewelry. The profession of hairdressing was launched as a genuine profession when Legros de Rumigny was declared the first official hairdresser of the French court. In 1765 de Rumigny published his book Art de la Coiffure des Dames, which discussed hairdressing and included pictures of hairstyles designed by him. The book was a best seller amongst Frenchwomen, and four years later de Rumigny opened a school for hairdressers: Academie de Coiffure. At the school he taught men and women to cut hair and create his special hair designs.

By 1777, approximately 1,200 hairdressers were working in Paris. During this time, barbers formed unions and demanded that hairdressers do the same. Wigmakers also demanded that hairdressers stop encroaching on their trade; hairdressers responded that their roles were not the same, since hairdressing was a service while wigmakers made and sold a product. De Rumigny died in 1770, and other hairdressers gained in popularity, specifically three Frenchmen: Frederic, Larseueur, and Léonard. Léonard and Larseueur were the stylists for Marie Antoinette. Léonard was her favorite, and developed many hairstyles that became fashion trends within wealthy Parisian circles, including the loge d'opera, which towered five feet over the wearer's head. During the French Revolution he escaped the country hours before he was to be arrested alongside the king, queen, and other clients. Léonard emigrated to Russia, where he worked as the premier hairdresser for Russian nobility.

19th century

Parisian hairdressers continued to develop influential styles during the early 19th century. Wealthy French women had their favorite hairdressers style their hair in their own homes, a practice also seen in wealthy communities elsewhere. Hairdressing was primarily a service affordable only to those wealthy enough to hire professionals or to pay servants to care for their hair. In the United States, Marie Laveau was one of the most famous hairdressers of the period. Based in New Orleans, Laveau began working as a hairdresser in the early 1820s, tending the hair of the city's wealthy women. She was a voodoo practitioner, known as the "Voodoo Queen of New Orleans," and she used her connections with wealthy women to support her religious practice, providing "help" to women who needed it in exchange for money, gifts and other favors.

French hairdresser Marcel Grateau developed the "Marcel wave" late in the century. The wave required a special hot hair iron and had to be done by an experienced hairdresser. Fashionable women asked to have their hair "marceled." During this period, hairdressers began opening salons in cities and towns, led by Martha Matilda Harper, who developed one of the first retail chains of hair salons, the Harper Method.

20th century

Beauty salons became popular during the 20th century, alongside men's barbershops. They served as social spaces, allowing women to socialize while having their hair done and receiving other services such as facials. Wealthy women still had hairdressers visit their homes, but the majority of women visited salons, including high-end salons such as Elizabeth Arden's Red Door Salon.

Major advances in hairdressing tools took place during this period. Electricity led to the development of permanent wave machines and hair dryers. These tools allowed hairdressers to promote visits to their salons over the more limited services possible in home visits. New coloring processes were developed, including those by Eugène Schueller in Paris, which allowed hairdressers to perform complicated styling techniques. After World War I, the bob cut, the shingle bob and other short haircuts became popular. In the 1930s complicated styles came back into fashion, along with the Marcel wave. Hairdressing was one of the few professions considered acceptable for women at the time, alongside teaching, nursing and clerical work.

Modern hairdressing:

Specialties

Some hairstylists specialize in particular services, such as colorists, who specialize in coloring hair.

By country:

United States

Employment of hairdressers was projected to grow by 20% between 2008 and 2018, faster than the average for all occupations. A state license is required to practice, with qualifications varying from state to state. Generally, a person interested in hairdressing must have a high school diploma or GED, be at least 16 years of age, and have graduated from a state-licensed barber or cosmetology school. Full-time programs often last 9 months or more and may lead to an associate degree. After graduating, students take a state licensing exam, which often consists of a written test and either a practical styling test or an oral exam. Hairdressers must pay for their licenses, which sometimes must be renewed. Some states allow hairdressers licensed in another state to work without obtaining a new license, while others require a new one. About 44% of hairdressers are self-employed. Many work 40-hour weeks, and the self-employed often work longer. In 2008, 29% of hairstylists worked part-time and 14% had variable schedules. As of 2008, about 630,700 people worked as hairdressers, with a projected increase to 757,700 by 2018.
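As a quick arithmetic check, the 2008 and 2018 employment figures quoted above correspond to growth of roughly 20%:

```latex
\frac{757{,}700 - 630{,}700}{630{,}700} = \frac{127{,}000}{630{,}700} \approx 0.201 \approx 20\%
```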

Occupational health hazards

Like many occupations, hairdressing is associated with potential health hazards stemming from the products workers use on the job and the environment they work in. Exposure risks vary widely across the profession because of differences in the physical workspace, such as whether proper ventilation is used, and in individual exposures to various chemicals over a career. Hairdressers encounter a variety of chemicals on the job by handling products such as shampoos, conditioners, sprays, chemical straighteners, permanent curling agents, bleaching agents, and dyes. Although the U.S. Food and Drug Administration (FDA) enforces certain requirements for cosmetic products, such as proper labeling and prohibitions on adulteration, it does not require products to be approved before they are sold to the public. This leaves room for variation in product formulation, which can make occupational exposure difficult to evaluate. However, certain chemicals are commonly found in products used in hair salons and have been the subject of various occupational hazard studies.

Formaldehyde

Formaldehyde is a chemical used in various industries and has been classified by the International Agency for Research on Cancer (IARC) as “carcinogenic to humans”. Formaldehyde and methylene glycol, a formaldehyde derivative, have been found in hair smoothing products such as the Brazilian Blowout. The liquid product is applied to the hair, which is then dried with a blow dryer. Simulation studies as well as observational studies of working salons have measured airborne formaldehyde levels that meet or exceed occupational exposure limits. The observed levels vary with the ventilation used in the workplace and with the amount of formaldehyde and its derivatives in the product itself.

Aromatic amines

Aromatic amines are a broad class of compounds containing an amine group attached to an aromatic ring. IARC has categorized most aromatic amines as known carcinogens. They are used in several industries, including pesticides, medications, and industrial dyes. Aromatic amines have also been found in oxidative (permanent) hair dyes; however, because of their potential carcinogenicity, they were removed from most hair dye formulations and their use was banned outright in the European Union.

Phthalates

Phthalates are a class of compounds that are esters of phthalic acid. Their main use has been as plasticizers, additives that change certain physical characteristics of plastic products. They have also been widely used as preservatives in cosmetic products, including shampoos and hair sprays. Phthalates have been implicated as endocrine-disrupting chemicals: compounds that mimic the body's own hormones and can lead to dysregulation of the reproductive and neurologic systems as well as changes in metabolism and cell proliferation.

Health considerations:

Reproductive

Most hairdressers are women of childbearing age, which raises additional concerns about potential workplace exposures and the risks they may pose. Some studies have linked mothers who are hairdressers with adverse birth outcomes such as low birth weight, preterm delivery, perinatal death, and neonates who are small for gestational age. However, these studies failed to show a well-defined association between individual risk factors and adverse birth outcomes. Other studies have indicated a correlation between professional hairdressing and menstrual dysfunction as well as subfertility, but subsequent studies did not show similar correlations. Because of such inconsistencies, further research is required.

Oncologic

The International Agency for Research on Cancer (IARC) has classified occupational exposures of hairdressers and barbers to chemical agents found in the workplace as “probably carcinogenic to humans”, Group 2A in its classification system. This is due in part to the presence of chemical compounds, historically found in hair products, that have shown mutagenic and carcinogenic effects in animal and in vitro studies. However, consistent effects have yet to be established in humans. Some studies have shown a link between occupational exposure to hair dyes and an increased risk of bladder cancer in male hairdressers but not in female hairdressers. Other malignancies, such as ovarian, breast and lung cancers, have also been studied in hairdressers, but these studies were either inconclusive because of potential confounding or showed no increase in risk.

Respiratory

Volatile organic compounds have been shown to be the largest inhalation exposure in hair salons, with the greatest concentrations occurring while hair dyes are mixed and hair sprays are used. Other notable respiratory exposures include ethanol, ammonia, and formaldehyde. Exposure concentrations generally depended on whether the work area was ventilated. Studies have found higher rates of respiratory symptoms such as cough, wheezing, rhinitis, and shortness of breath among hairdressers than among other groups. Reduced lung function on spirometry has also been demonstrated in hairdressers compared with unexposed reference groups.

Dermal

Contact dermatitis is a common dermatological diagnosis among hairdressers. Allergen sensitization is considered the main cause of most cases of contact dermatitis in hairdressers, because products such as hair dyes, bleaches and permanent curling agents contain chemicals that are known sensitizers. Hairdressers also spend a significant amount of time doing wet work, with their hands immersed in water or handling wet hair and tools. Over time, this type of work has also been implicated in higher rates of irritant dermatitis among hairdressers, because it damages the skin's natural protective barrier.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2129 2024-04-24 00:08:56

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 48,428

Re: Miscellany

2131) Amputation

Gist

Amputation is the loss or removal of a body part such as a finger, toe, hand, foot, arm or leg. It can be a life-changing experience, affecting your ability to move, work, interact with others and maintain your independence.

Summary

Amputation is the removal of a limb by trauma, medical illness, or surgery. As a surgical measure, it is used to control pain or a disease process in the affected limb, such as malignancy or gangrene. In some cases, it is carried out as preventive surgery for such problems. A special case is congenital amputation, a congenital disorder in which fetal limbs have been cut off by constrictive bands. In some countries, judicial amputation is currently used to punish people who commit crimes. Amputation has also been used as a tactic in war and acts of terrorism; it may also occur as a war injury. In some cultures and religions, minor amputations or mutilations are considered a ritual accomplishment. A person who performs an amputation is called an amputator. The oldest evidence of the practice comes from a skeleton buried in Liang Tebo cave, East Kalimantan, Indonesian Borneo, dating back at least 31,000 years; the amputation was performed when the individual was a young child.

Details

Amputation is the surgical removal of all or part of a limb or extremity such as an arm, leg, foot, hand, toe, or finger.

About 1.8 million Americans are living with amputations. Amputation of the leg, either above or below the knee, is the most common amputation surgery.

Reasons for Amputation

There are many reasons an amputation may be necessary. The most common is poor circulation caused by damage to or narrowing of the arteries, called peripheral arterial disease. Without adequate blood flow, the body's cells cannot get the oxygen and nutrients they need from the bloodstream. As a result, the affected tissue begins to die and infection may set in.

Other causes for amputation may include:

* Severe injury (from a vehicle accident or serious burn, for example)

* Cancerous tumor in the bone or muscle of the limb

* Serious infection that does not get better with antibiotics or other treatment

* Thickening of nerve tissue, called a neuroma

* Frostbite

The Amputation Procedure

An amputation usually requires a hospital stay of five to 14 days or more, depending on the surgery and complications. The procedure itself may vary, depending on the limb or extremity being amputated and the patient's general health.

Amputation may be done under general anesthesia (meaning the patient is asleep) or with spinal anesthesia, which numbs the body from the waist down.

When performing an amputation, the surgeon removes all damaged tissue while leaving as much healthy tissue as possible.

A doctor may use several methods to determine where to cut and how much tissue to remove. These include:

* Checking for a pulse close to where the surgeon is planning to cut

* Comparing skin temperatures of the affected limb with those of a healthy limb

* Looking for areas of reddened skin

* Checking to see if the skin near the site where the surgeon is planning to cut is still sensitive to touch

During the procedure itself, the surgeon will:

* Remove the diseased tissue and any crushed bone

* Smooth uneven areas of bone

* Seal off blood vessels and nerves

* Cut and shape muscles so that the stump, or end of the limb, will be able to have an artificial limb (prosthesis) attached to it


The surgeon may choose to close the wound right away by sewing the skin flaps (called a closed amputation). Or the surgeon may leave the site open for several days in case there's a need to remove additional tissue.

The surgical team then places a sterile dressing on the wound and may place a stocking over the stump to hold drainage tubes or bandages. The doctor may place the limb in traction, in which a device holds it in position, or may use a splint.

Recovery From Amputation

Recovery from amputation depends on the type of procedure and anesthesia used.

In the hospital, the staff changes the dressings on the wound or teaches the patient to change them. The doctor monitors wound healing and any conditions that might interfere with healing, such as diabetes or hardening of the arteries. The doctor prescribes medications to ease pain and help prevent infection.

If the patient has problems with phantom pain (a sense of pain in the amputated limb) or grief over the lost limb, the doctor will prescribe medication and/or counseling, as necessary.

Physical therapy, beginning with gentle stretching exercises, often starts soon after surgery. Practice with the artificial limb may begin as soon as 10 to 14 days after surgery.

Ideally, the wound should fully heal in about four to eight weeks. But the physical and emotional adjustment to losing a limb can be a long process. Long-term recovery and rehabilitation will include:

* Exercises to improve muscle strength and control

* Activities to help restore the ability to carry out daily activities and promote independence

* Use of artificial limbs and assistive devices

* Emotional support, including counseling, to help with grief over the loss of the limb and adjustment to the new body image

Additional Information:

Prevention

Methods of preventing amputation, known as limb-sparing techniques, depend on the problems that might make amputation necessary. Chronic infections, often arising from diabetes or from decubitus ulcers in bedridden patients, commonly lead to gangrene, which, when widespread, necessitates amputation.

There are two key challenges: first, many patients have impaired circulation in their extremities, and second, infections are difficult to cure in limbs with poor blood circulation.

Crush injuries with extensive tissue damage and poor circulation may benefit from hyperbaric oxygen therapy (HBOT). The high level of oxygenation and the revascularization it promotes can speed up recovery and help prevent infection.

One study found that a patented method called the Circulator Boot achieved significant results in preventing amputation in patients with diabetes and arteriosclerosis. Another study found it also effective for healing limb ulcers caused by peripheral vascular disease. The boot checks the heart rhythm and compresses the limb between heartbeats; the compression helps heal wounds in the walls of veins and arteries and helps push blood back to the heart.

For victims of trauma, advances in microsurgery in the 1970s have made replantation of severed body parts possible.

Laws, rules and guidelines, together with the use of modern equipment, help protect people from traumatic amputations.

Prognosis

The individual may experience psychological trauma and emotional discomfort. The stump will remain an area of reduced mechanical stability. Limb loss can present significant or even drastic practical limitations.

A large proportion of amputees (50–80%) experience the phenomenon of phantom limbs; they feel body parts that are no longer there. These limbs can itch, ache, burn, feel tense, dry or wet, locked in or trapped or they can feel as if they are moving. Some scientists believe it has to do with a kind of neural map that the brain has of the body, which sends information to the rest of the brain about limbs regardless of their existence. Phantom sensations and phantom pain may also occur after the removal of body parts other than the limbs, e.g. after amputation of the breast, extraction of a tooth (phantom tooth pain) or removal of an eye (phantom eye syndrome).

A similar phenomenon is unexplained sensation in a body part unrelated to the amputated limb. It has been hypothesized that the portion of the brain responsible for processing stimulation from the amputated limb, being deprived of input, expands into the surrounding brain, so that an individual who has had an arm amputated may experience unexplained pressure or movement on the face or head.

In many cases, the phantom limb aids in adaptation to a prosthesis, as it permits the person to experience proprioception of the prosthetic limb. To improve resistance, usability, comfort or healing, stump socks of some type may be worn instead of, or as part of wearing, a prosthesis.

Another side effect can be heterotopic ossification, especially when a bone injury is combined with a head injury. The brain signals bone to grow where scar tissue would normally form, and nodules and other growths can interfere with prosthetics and sometimes require further operations. This type of injury has been especially common among soldiers wounded by improvised explosive devices in the Iraq War.

Thanks to technological advances in prosthetics, many amputees live active lives with little restriction. Organizations such as the Challenged Athletes Foundation have been established to give amputees the opportunity to take part in athletics and adaptive sports such as amputee soccer.

Nearly half of the individuals who have an amputation due to vascular disease will die within five years, usually as a result of extensive comorbidities rather than direct consequences of the amputation. This is higher than the five-year mortality rates for breast cancer, colon cancer, and prostate cancer. Of persons with diabetes who have a lower extremity amputation, up to 55% will require amputation of the second leg within two to three years.

Amputation is surgery to remove all or part of a limb or extremity (outer limbs). Common types of amputation involve:

* Above-knee amputation, removing part of the thigh, knee, shin, foot and toes.

* Below-knee amputation, removing the lower leg, foot and toes.

* Arm amputation.

* Hand amputation.

* Finger amputation.

* Foot amputation, removing part of the foot.

* Toe amputation.

Why are amputations done?

Amputation can be necessary to keep an infection from spreading through your limbs and to manage pain. The most common reason for an amputation is a wound that does not heal. Often this can be from not having enough blood flow to that limb.

After a severe injury, such as a crushing injury, amputation may be necessary if the surgeon cannot repair your limb.

You also may need an amputation if you have:

* Cancerous tumors in the limb.

* Frostbite.

* Gangrene (tissue death).

* Neuroma, or thickening of nerve tissue.

* Peripheral arterial disease (PAD), or blockage of the arteries.

* Severe injury, such as from a car accident.

* Diabetes that leads to nonhealing or infected wounds or tissue death.


#2130 2024-04-25 00:10:52

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 48,428

Re: Miscellany

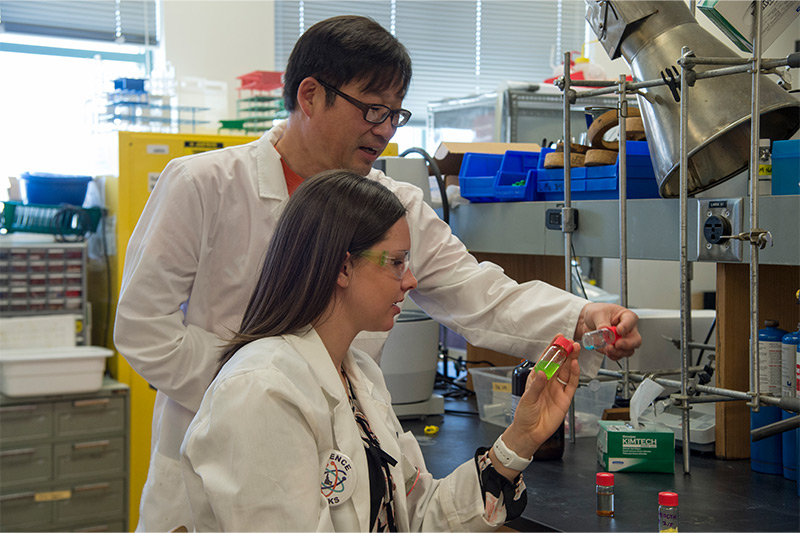

2132) Chemistry

Gist

Chemistry is a branch of natural science that deals principally with the properties of substances, the changes they undergo, and the natural laws that describe these changes.

Summary

Chemistry, the science that deals with the properties, composition, and structure of substances (defined as elements and compounds), the transformations they undergo, and the energy that is released or absorbed during these processes. Every substance, whether naturally occurring or artificially produced, consists of one or more of the hundred-odd species of atoms that have been identified as elements. Although these atoms, in turn, are composed of more elementary particles, they are the basic building blocks of chemical substances; there is no quantity of oxygen, mercury, or gold, for example, smaller than an atom of that substance. Chemistry, therefore, is concerned not with the subatomic domain but with the properties of atoms and the laws governing their combinations and how the knowledge of these properties can be used to achieve specific purposes.

The great challenge in chemistry is the development of a coherent explanation of the complex behaviour of materials, why they appear as they do, what gives them their enduring properties, and how interactions among different substances can bring about the formation of new substances and the destruction of old ones. From the earliest attempts to understand the material world in rational terms, chemists have struggled to develop theories of matter that satisfactorily explain both permanence and change. The ordered assembly of indestructible atoms into small and large molecules, or extended networks of intermingled atoms, is generally accepted as the basis of permanence, while the reorganization of atoms or molecules into different arrangements lies behind theories of change. Thus chemistry involves the study of the atomic composition and structural architecture of substances, as well as the varied interactions among substances that can lead to sudden, often violent reactions.

Chemistry also is concerned with the utilization of natural substances and the creation of artificial ones. Cooking, fermentation, glass making, and metallurgy are all chemical processes that date from the beginnings of civilization. Today, vinyl, Teflon, liquid crystals, semiconductors, and superconductors represent the fruits of chemical technology. The 20th century saw dramatic advances in the comprehension of the marvelous and complex chemistry of living organisms, and a molecular interpretation of health and disease holds great promise. Modern chemistry, aided by increasingly sophisticated instruments, studies materials as small as single atoms and as large and complex as DNA (deoxyribonucleic acid), which contains millions of atoms. New substances can even be designed to bear desired characteristics and then synthesized. The rate at which chemical knowledge continues to accumulate is remarkable. Over time more than 8,000,000 different chemical substances, both natural and artificial, have been characterized and produced. The number was less than 500,000 as recently as 1965.

Intimately interconnected with the intellectual challenges of chemistry are those associated with industry. In the mid-19th century the German chemist Justus von Liebig commented that the wealth of a nation could be gauged by the amount of sulfuric acid it produced. This acid, essential to many manufacturing processes, remains today the leading chemical product of industrialized countries. As Liebig recognized, a country that produces large amounts of sulfuric acid is one with a strong chemical industry and a strong economy as a whole. The production, distribution, and utilization of a wide range of chemical products is common to all highly developed nations. In fact, one can say that the “iron age” of civilization is being replaced by a “polymer age,” for in some countries the total volume of polymers now produced exceeds that of iron.

Details

Chemistry is the scientific study of the properties and behavior of matter. It is a physical science within the natural sciences that studies the chemical elements that make up matter and compounds made of atoms, molecules and ions: their composition, structure, properties, behavior and the changes they undergo during reactions with other substances. Chemistry also addresses the nature of chemical bonds in chemical compounds.

In the scope of its subject, chemistry occupies an intermediate position between physics and biology. It is sometimes called the central science because it provides a foundation for understanding both basic and applied scientific disciplines at a fundamental level. For example, chemistry explains aspects of plant growth (botany), the formation of igneous rocks (geology), how atmospheric ozone is formed and how environmental pollutants are degraded (ecology), the properties of the soil on the Moon (cosmochemistry), how medications work (pharmacology), and how to collect DNA evidence at a crime scene (forensics).

Chemistry has existed under various names since ancient times. It has evolved, and now chemistry encompasses various areas of specialisation, or subdisciplines, that continue to increase in number and interrelate to create further interdisciplinary fields of study. The applications of various fields of chemistry are used frequently for economic purposes in the chemical industry.

Etymology

The word chemistry comes from a modification during the Renaissance of the word alchemy, which referred to an earlier set of practices that encompassed elements of chemistry, metallurgy, philosophy, astrology, astronomy, mysticism, and medicine. Alchemy is often associated with the quest to turn lead or other base metals into gold, though alchemists were also interested in many of the questions of modern chemistry.

The modern word alchemy in turn is derived from the Arabic word al-kīmīā. This may have Egyptian origins, since al-kīmīā is derived from an Ancient Greek word, which is in turn derived from Kemet, the ancient name of Egypt in the Egyptian language. Alternatively, al-kīmīā may derive from a Greek word meaning 'cast together'.

Modern principles

The current model of atomic structure is the quantum mechanical model. Traditional chemistry starts with the study of elementary particles, atoms, molecules, substances, metals, crystals and other aggregates of matter. Matter can be studied in solid, liquid, gas and plasma states, in isolation or in combination. The interactions, reactions and transformations that are studied in chemistry are usually the result of interactions between atoms, leading to rearrangements of the chemical bonds which hold atoms together. Such behaviors are studied in a chemistry laboratory.

The chemistry laboratory stereotypically uses various forms of laboratory glassware. However, glassware is not central to chemistry, and a great deal of experimental (as well as applied/industrial) chemistry is done without it.

A chemical reaction is a transformation of some substances into one or more different substances. The basis of such a chemical transformation is the rearrangement of electrons in the chemical bonds between atoms. It can be symbolically depicted through a chemical equation, which usually involves atoms as subjects. The number of atoms on the left and the right of the equation for a chemical transformation is equal. (When the number of atoms on either side is unequal, the transformation is referred to as a nuclear reaction or radioactive decay.) The types of chemical reactions a substance may undergo and the energy changes that may accompany them are constrained by certain basic rules, known as chemical laws.
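The conservation rule above (the same number of atoms of each element on both sides of a chemical equation) can be checked mechanically. The Python sketch below is illustrative only: the helper names `count_atoms`, `side_atoms` and `is_balanced` are hypothetical, and it assumes simple formulas with no parentheses, charges or hydrate notation.

```python
import re
from collections import Counter

# An element symbol (capital letter plus optional lowercase letter)
# followed by an optional count, e.g. "H2" -> ("H", "2"), "O" -> ("O", "").
TOKEN = re.compile(r"([A-Z][a-z]?)(\d*)")


def count_atoms(formula: str) -> Counter:
    """Count atoms in a simple formula such as 'H2O' or 'CH4'.

    Assumption: no parentheses, brackets, charges or hydrate dots.
    """
    counts = Counter()
    for symbol, digits in TOKEN.findall(formula):
        counts[symbol] += int(digits) if digits else 1
    return counts


def side_atoms(side: str) -> Counter:
    """Total atoms on one side, e.g. '2H2 + O2', applying leading coefficients."""
    total = Counter()
    for term in side.split("+"):
        match = re.match(r"(\d*)(.+)", term.strip())
        coeff = int(match.group(1)) if match.group(1) else 1
        for symbol, n in count_atoms(match.group(2)).items():
            total[symbol] += coeff * n
    return total


def is_balanced(equation: str) -> bool:
    """True if every element has the same atom count on both sides of '->'."""
    left, right = equation.split("->")
    return side_atoms(left) == side_atoms(right)


print(is_balanced("2H2 + O2 -> 2H2O"))  # True
print(is_balanced("H2 + O2 -> H2O"))    # False
```

In the unbalanced example, the left side has two oxygen atoms but the right side only one, so the check fails; adding the coefficient 2 to both H2 and H2O restores equal counts.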

Energy and entropy considerations are important in almost all chemical studies. Chemical substances are classified in terms of their structure, phase and chemical composition. They can be analyzed using the tools of chemical analysis, e.g. spectroscopy and chromatography. Scientists engaged in chemical research are known as chemists, and most chemists specialize in one or more sub-disciplines. Several concepts are essential for the study of chemistry; some of them are:

Matter

In chemistry, matter is defined as anything that has rest mass and volume (it takes up space) and is made up of particles. The particles that make up matter also have rest mass, although not all particles do; the photon, for example, has none. Matter can be a pure chemical substance or a mixture of substances.

Atom

The atom is the basic unit of chemistry. It consists of a dense core called the atomic nucleus surrounded by a space occupied by an electron cloud. The nucleus is made up of positively charged protons and uncharged neutrons (together called nucleons), while the electron cloud consists of negatively charged electrons which orbit the nucleus. In a neutral atom, the negatively charged electrons balance out the positive charge of the protons. The nucleus is dense; the mass of a nucleon is approximately 1,836 times that of an electron, yet the radius of an atom is about 10,000 times that of its nucleus.
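The density of the nucleus follows from the two ratios quoted above. Treating the atom and its nucleus as spheres (a simplifying assumption), volume scales with the cube of the radius, so a radius ratio of about 10,000 gives:

```latex
\frac{V_{\text{atom}}}{V_{\text{nucleus}}} \approx \left(\frac{r_{\text{atom}}}{r_{\text{nucleus}}}\right)^{3} \approx \left(10^{4}\right)^{3} = 10^{12}
```

Because each nucleon is roughly 1,836 times as massive as an electron, nearly all of the atom's mass is packed into about one trillionth of its volume.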

The atom is also the smallest entity that can be envisaged to retain the chemical properties of the element, such as electronegativity, ionization potential, preferred oxidation state(s), coordination number, and preferred types of bonds to form (e.g., metallic, ionic, covalent).

Element

A chemical element is a pure substance composed of a single type of atom, characterized by the particular number of protons in the nuclei of its atoms, known as the atomic number and represented by the symbol Z. The mass number is the sum of the number of protons and neutrons in a nucleus. Although all atoms of an element have the same atomic number, they do not necessarily have the same mass number; atoms of an element with different mass numbers are known as isotopes. For example, all atoms with 6 protons in their nuclei are atoms of the chemical element carbon, but atoms of carbon may have mass numbers of 12 or 13.
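As a worked example of the definitions above, write A for the mass number and N for the number of neutrons (conventional symbols introduced here; only Z appears in the text). The two carbon isotopes then differ only in their neutron count:

```latex
A = Z + N, \qquad
{}^{12}\mathrm{C}:\; N = 12 - 6 = 6, \qquad
{}^{13}\mathrm{C}:\; N = 13 - 6 = 7
```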

The standard presentation of the chemical elements is in the periodic table, which orders elements by atomic number. The periodic table is arranged in groups, or columns, and periods, or rows. The periodic table is useful in identifying periodic trends.

Compound

A compound is a pure chemical substance composed of more than one element. The properties of a compound bear little similarity to those of its elements. The standard nomenclature of compounds is set by the International Union of Pure and Applied Chemistry (IUPAC). Organic compounds are named according to the organic nomenclature system. The names for inorganic compounds are created according to the inorganic nomenclature system. When a compound has more than one component, the components are divided into two classes, the electropositive and the electronegative components. In addition, the Chemical Abstracts Service has devised a method to index chemical substances, in which each chemical substance is identifiable by a number known as its CAS registry number.

Molecule

A molecule is the smallest indivisible portion of a pure chemical substance that has its unique set of chemical properties, that is, its potential to undergo a certain set of chemical reactions with other substances. However, this definition only works well for substances that are composed of molecules, which is not true of many substances (see below). Molecules are typically a set of atoms bound together by covalent bonds, such that the structure is electrically neutral and all valence electrons are paired with other electrons either in bonds or in lone pairs.

Thus, molecules exist as electrically neutral units, unlike ions. When this rule is broken, giving the "molecule" a charge, the result is sometimes named a molecular ion or a polyatomic ion. However, the discrete and separate nature of the molecular concept usually requires that molecular ions be present only in well-separated form, such as a directed beam in a vacuum in a mass spectrometer. Charged polyatomic collections residing in solids (for example, common sulfate or nitrate ions) are generally not considered "molecules" in chemistry. Some molecules contain one or more unpaired electrons, creating radicals. Most radicals are comparatively reactive, but some, such as nitric oxide (NO), can be stable.

The "inert" or noble gas elements (helium, neon, argon, krypton, xenon and radon) are composed of lone atoms as their smallest discrete unit, but the other isolated chemical elements consist of either molecules or networks of atoms bonded to each other in some way. Identifiable molecules compose familiar substances such as water, air, and many organic compounds like alcohol, sugar, gasoline, and the various pharmaceuticals.

However, not all substances or chemical compounds consist of discrete molecules; indeed, most of the solid substances that make up the crust, mantle, and core of the Earth are chemical compounds without molecules. These other types of substances, such as ionic compounds and network solids, are organized in such a way that they lack identifiable molecules. Instead, these substances are discussed in terms of formula units or unit cells as the smallest repeating structure within the substance. Examples of such substances are mineral salts (such as table salt), solids such as graphite and diamond, metals, and familiar silica and silicate minerals such as quartz and granite.

One of the main characteristics of a molecule is its geometry, often called its structure. While the structure of diatomic, triatomic, or tetra-atomic molecules may be trivial (linear, angular, pyramidal, etc.), the structure of polyatomic molecules constituted of more than six atoms (of several elements) can be crucial to their chemical nature.

Substance and mixture

A chemical substance is a kind of matter with a definite composition and set of properties. A collection of substances is called a mixture. Examples of mixtures are air and alloys.

Mole and amount of substance

The mole is a unit of measurement that denotes an amount of substance (also called chemical amount). One mole is defined to contain exactly 6.02214076 × 10²³ particles (atoms, molecules, ions, or electrons), where the number of particles per mole is known as the Avogadro constant. Molar concentration is the amount of a particular substance per volume of solution, and is commonly reported in mol/dm³.
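The mole arithmetic can be illustrated with a short Python sketch. It uses the exact Avogadro constant quoted in the definition and treats 1 dm³ as 1 litre; the function names are hypothetical, chosen only for this example.

```python
# Exact by definition in the SI: particles per mole.
AVOGADRO = 6.02214076e23


def particles_from_moles(n_mol: float) -> float:
    # N = n * N_A
    return n_mol * AVOGADRO


def moles_from_particles(count: float) -> float:
    # n = N / N_A
    return count / AVOGADRO


def molar_concentration(n_mol: float, volume_dm3: float) -> float:
    # c = n / V, giving mol/dm^3 (1 dm^3 = 1 litre)
    if volume_dm3 <= 0:
        raise ValueError("volume must be positive")
    return n_mol / volume_dm3


# Example: 0.5 mol of solute made up to 0.25 dm^3 of solution.
print(particles_from_moles(0.5))       # roughly 3.011e23 particles
print(molar_concentration(0.5, 0.25))  # 2.0 (mol/dm^3)
```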

Additional Information

Chemistry is the scientific study of matter, its properties, composition, and interactions. It is often referred to as the central science because it connects the physical sciences, such as physics, with the life sciences, such as biology. Understanding chemistry is crucial for comprehending the world around us, from the air we breathe to the food we eat and the materials we use in everyday life.

Chemistry has many sub-disciplines such as analytical chemistry, physical chemistry, biochemistry, and more. Chemistry plays a crucial role in various industries, including pharmaceuticals, materials science, environmental science, and energy production, making it a cornerstone of modern science and technology.

Chemistry is the area of science devoted to studying the nature, composition, and properties of the elements and compounds that form matter, as well as the reactions through which they form new substances. It is further categorized into branches based on particular areas of study.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2131 2024-04-26 00:08:30

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 48,428

Re: Miscellany

2133) Metallurgy

Gist

Metallurgy is a domain of materials science and engineering that studies the physical and chemical behavior of metallic elements, their inter-metallic compounds, and their mixtures, which are known as alloys.

Summary

Metallurgy encompasses both the science and the technology of metals, including the production of metals and the engineering of metal components used in products for both consumers and manufacturers. Metallurgy is distinct from the craft of metalworking. Metalworking relies on metallurgy in a similar manner to how medicine relies on medical science for technical advancement. A specialist practitioner of metallurgy is known as a metallurgist.

The science of metallurgy is further subdivided into two broad categories: chemical metallurgy and physical metallurgy. Chemical metallurgy is chiefly concerned with the reduction and oxidation of metals, and the chemical performance of metals. Subjects of study in chemical metallurgy include mineral processing, the extraction of metals, thermodynamics, electrochemistry, and chemical degradation (corrosion). In contrast, physical metallurgy focuses on the mechanical properties of metals, the physical properties of metals, and the physical performance of metals. Topics studied in physical metallurgy include crystallography, material characterization, mechanical metallurgy, phase transformations, and failure mechanisms.

Historically, metallurgy has predominantly focused on the production of metals. Metal production begins with the processing of ores to extract the metal, and includes the mixture of metals to make alloys. Metal alloys are often a blend of at least two different metallic elements. However, non-metallic elements are often added to alloys in order to achieve properties suitable for an application. The study of metal production is subdivided into ferrous metallurgy (also known as black metallurgy) and non-ferrous metallurgy, also known as colored metallurgy.

Ferrous metallurgy involves processes and alloys based on iron, while non-ferrous metallurgy involves processes and alloys based on other metals. The production of ferrous metals accounts for 95% of world metal production.

Modern metallurgists work in both emerging and traditional areas as part of an interdisciplinary team alongside material scientists and other engineers. Some traditional areas include mineral processing, metal production, heat treatment, failure analysis, and the joining of metals (including welding, brazing, and soldering). Emerging areas for metallurgists include nanotechnology, superconductors, composites, biomedical materials, electronic materials (semiconductors) and surface engineering. Many applications, practices, and devices associated with or involved in metallurgy were established in ancient India and China, such as wootz steel, bronze, the blast furnace, cast iron, hydraulic-powered trip hammers, and double-acting piston bellows.

Details

Metallurgy is the art and science of extracting metals from their ores and modifying the metals for use. Metallurgy customarily refers to commercial as opposed to laboratory methods. It also concerns the chemical, physical, and atomic properties and structures of metals and the principles whereby metals are combined to form alloys.

History of metallurgy

The present-day use of metals is the culmination of a long path of development extending over approximately 6,500 years. It is generally agreed that the first known metals were gold, silver, and copper, which occurred in the native or metallic state, of which the earliest were in all probability nuggets of gold found in the sands and gravels of riverbeds. Such native metals became known and were appreciated for their ornamental and utilitarian values during the latter part of the Stone Age.

Earliest development

Gold can be agglomerated into larger pieces by cold hammering, but native copper cannot, and an essential step toward the Metal Age was the discovery that metals such as copper could be fashioned into shapes by melting and casting in molds; among the earliest known products of this type are copper axes cast in the Balkans in the 4th millennium BCE. Another step was the discovery that metals could be recovered from metal-bearing minerals. These had been collected and could be distinguished on the basis of colour, texture, weight, and flame colour and smell when heated. The notably greater yield obtained by heating native copper with associated oxide minerals may have led to the smelting process, since these oxides are easily reduced to metal in a charcoal bed at temperatures in excess of 700 °C (1,300 °F), as the reductant, carbon monoxide, becomes increasingly stable. In order to effect the agglomeration and separation of melted or smelted copper from its associated minerals, it was necessary to introduce iron oxide as a flux. This further step forward can be attributed to the presence of iron oxide gossan minerals in the weathered upper zones of copper sulfide deposits.
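The reduction step described here can be written as idealized overall equations. This sketch assumes the associated oxide minerals are cuprite (Cu₂O) and tenorite (CuO), which the text does not name, and it ignores the intermediate steps of a real charcoal fire: charcoal burning with a limited air supply yields carbon monoxide, which then removes oxygen from the copper oxide.

```latex
\begin{align*}
2\,\mathrm{C} + \mathrm{O_2} &\rightarrow 2\,\mathrm{CO} \\
\mathrm{Cu_2O} + \mathrm{CO} &\rightarrow 2\,\mathrm{Cu} + \mathrm{CO_2} \\
\mathrm{CuO} + \mathrm{CO} &\rightarrow \mathrm{Cu} + \mathrm{CO_2}
\end{align*}
```

Each equation balances atom for atom; for example, in the second line there are 2 Cu, 2 O and 1 C on each side.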

Bronze

In many regions, copper-arsenic alloys, of superior properties to copper in both cast and wrought form, were produced in the next period. This may have been accidental at first, owing to the similarity in colour and flame colour between the bright green copper carbonate mineral malachite and the weathered products of such copper-arsenic sulfide minerals as enargite, and it may have been followed later by the purposeful selection of arsenic compounds based on their garlic odour when heated.

Arsenic contents varied from 1 to 7 percent, with up to 3 percent tin. Essentially arsenic-free copper alloys with higher tin content, in other words true bronze, seem to have appeared between 3000 and 2500 BCE, beginning in the Tigris-Euphrates delta. The discovery of the value of tin may have occurred through the use of stannite, a mixed sulfide of copper, iron, and tin, although this mineral is not as widely available as the principal tin mineral, cassiterite, which must have been the eventual source of the metal. Cassiterite is strikingly dense and occurs as pebbles in alluvial deposits together with arsenic minerals and gold; it also occurs to a degree in the iron oxide gossans mentioned above.

While there may have been some independent development of bronze in varying localities, it is most likely that the bronze culture spread through trade and the migration of peoples from the Middle East to Egypt, Europe, and possibly China. In many civilizations the production of copper, arsenical copper, and tin bronze continued together for some time. The eventual disappearance of copper-arsenic alloys is difficult to explain. Production may have been based on minerals that were not widely available and became scarce, but the relative scarcity of tin minerals did not prevent a substantial trade in that metal over considerable distances. It may be that tin bronzes were eventually preferred owing to the chance of contracting arsenic poisoning from fumes produced by the oxidation of arsenic-containing minerals.

As the weathered copper ores in given localities were worked out, the harder sulfide ores beneath were mined and smelted. The minerals involved, such as chalcopyrite, a copper-iron sulfide, needed an oxidizing roast to remove sulfur as sulfur dioxide and yield copper oxide. This not only required greater metallurgical skill but also oxidized the intimately associated iron, which, combined with the use of iron oxide fluxes and the stronger reducing conditions produced by improved smelting furnaces, led to higher iron contents in the bronze.
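As an idealized illustration of the oxidizing roast, one can assume that chalcopyrite (CuFeS₂) is converted completely to copper(II) oxide, iron(III) oxide and sulfur dioxide; real roasting is partial and gives a mixture of products, so this equation is an assumption, not a description of any historical furnace. The small atom-counting helper below is hypothetical and handles only simple formulas without parentheses. It checks that the assumed equation 4 CuFeS₂ + 13 O₂ → 4 CuO + 2 Fe₂O₃ + 8 SO₂ is balanced.

```python
import re
from collections import Counter


def parse_formula(formula: str) -> Counter:
    # Element symbol (capital letter plus optional lowercase letter)
    # followed by an optional count; no parentheses or hydrates.
    counts = Counter()
    for symbol, num in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] += int(num) if num else 1
    return counts


def side_totals(side):
    # side is a list of (coefficient, formula) pairs.
    total = Counter()
    for coeff, formula in side:
        for element, n in parse_formula(formula).items():
            total[element] += coeff * n
    return total


def is_balanced(reactants, products) -> bool:
    # Balanced when every element has the same atom count on both sides.
    return side_totals(reactants) == side_totals(products)


# Assumed complete roast of chalcopyrite (idealization).
reactants = [(4, "CuFeS2"), (13, "O2")]
products = [(4, "CuO"), (2, "Fe2O3"), (8, "SO2")]
print(is_balanced(reactants, products))  # True
```

Counting by hand gives the same result: 4 Cu, 4 Fe, 8 S and 26 O on each side.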

Iron

It is not possible to mark a sharp division between the Bronze Age and the Iron Age. Small pieces of iron would have been produced in copper smelting furnaces as iron oxide fluxes and iron-bearing copper sulfide ores were used. In addition, higher furnace temperatures would have created more strongly reducing conditions (that is to say, a higher carbon monoxide content in the furnace gases). An early piece of iron from a trackway in the province of Drenthe, Netherlands, has been dated to 1350 BCE, a date normally taken as the Middle Bronze Age for this area. In Anatolia, on the other hand, iron was in use as early as 2000 BCE. There are also occasional references to iron in even earlier periods, but this material was of meteoric origin.